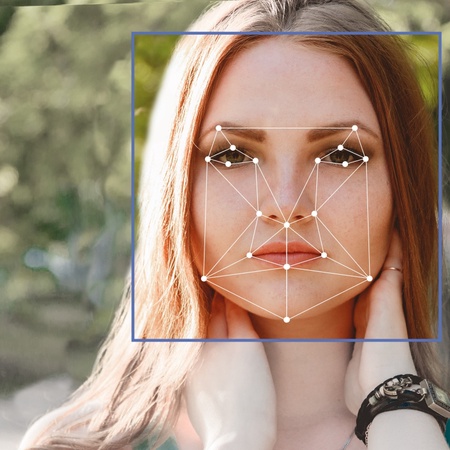

White faces generated by artificial intelligence (AI) now appear more real than human faces, according to new research from The Australian National University (ANU) and the University of Aberdeen.

In the study, participants judged AI-generated White faces to be human more often than they judged the faces of real people to be human. The same wasn’t true for images of people of colour.

The reason for the discrepancy is that AI algorithms are trained disproportionately on White faces, Dr Amy Dawel, the senior author of the paper, said.

“If White AI faces are consistently perceived as more realistic, this technology could have serious implications for people of colour by ultimately reinforcing racial biases online,” Dr Dawel said.

“This problem is already apparent in current AI technologies that are being used to create professional-looking headshots. When used for people of colour, the AI is altering their skin and eye colour to those of White people.”

One of the issues with AI ‘hyper-realism’ is that people often don’t realise they’re being fooled, the researchers found.

“Concerningly, people who thought that the AI faces were real most often were paradoxically the most confident their judgements were correct,” Elizabeth Miller, study co-author and PhD candidate at ANU, said.

“This means people who are mistaking AI imposters for real people don’t know they are being tricked.”

The researchers also discovered why AI faces are fooling people.

“It turns out that there are still physical differences between AI and human faces, but people tend to misinterpret them. For example, White AI faces tend to be more in-proportion and people mistake this as a sign of humanness,” Dr Dawel said.

“However, we can’t rely on these physical cues for long. AI technology is advancing so quickly that the differences between AI and human faces will probably disappear soon.”

The researchers argue this trend could have serious implications for the proliferation of misinformation and identity theft, and that action needs to be taken.

“AI technology can’t become sectioned off so only tech companies know what’s going on behind the scenes. There needs to be greater transparency around AI so researchers and civil society can identify issues before they become a major problem,” Dr Dawel said.

Raising public awareness can also play a significant role in reducing the risks posed by the technology, the researchers argue.

“Given that humans can no longer detect AI faces, society needs tools that can accurately identify AI imposters,” Dr Dawel said.

“Educating people about the perceived realism of AI faces could help make the public appropriately sceptical about the images they’re seeing online.”

Dr Clare Sutherland from the University of Aberdeen, who co-authored the paper, added: “As the world changes extremely rapidly with the introduction of AI, it’s critical that we make sure that no one is left behind or disadvantaged in any way, whether due to their ethnicity, gender, age, or any other protected characteristic.”

The study was published in Psychological Science, a journal of the Association for Psychological Science.

ENDS

Notes to Editors